Automatic evaluation in LLM Applications: how LlamaIndex can facilitate the process

Introduction

As language models (LLMs) become increasingly advanced, the need for efficient and precise evaluation of their generated responses grows. Applications must now consider knowledge processing, including the Retrieval-Augmented Generation (RAG) process, to tailor specific applications to their domains.

There are two main evaluation approaches: automatic evaluation and evaluation based on human-prepared reference sets. Each has its advantages and disadvantages.

Evaluation methods

Manual evaluation based on reference set

Created manually reference set evaluation has several advantages, such as knowing the expected system response for specific cases. However, it has significant drawbacks:

- Time-Consuming: Creating manual reference sets requires experts to prepare, verify, and approve each question and answer.

- Costly: Engaging experts incurs costs that can quickly escalate with an increasing number of questions and answers.

- Limited scalability: Maintaining and updating manual reference sets is challenging, with human resources becoming a bottleneck.

- Subjectivity: Evaluations based on reference data can be error-prone and subjective.

- Limited data diversity: Experts may not account for all possible question and answer variants.

Automatic evaluation

Automatic evaluation using LLM-based evaluators enables rapid and scalable, multi-faceted assessment of generated responses. Modern tools (e.g., Vertex, LlamaIndex) offer built-in evaluation functions that integrate models, enabling efficient detection of hallucinations and ensuring the coherence of questions and answers. Automatic evaluation speeds up the assessment process, reduces costs, and minimizes human error, making it invaluable in working with advanced language models.

Evaluation in co.brick

The evaluation problem is relevant at co.brick, where we develop applications for our clients and enhance the CoCo technology. CoCo is an AI platform utilizing generative AI to extract knowledge from technical systems (cloud-native, IIoT).

Automatic evaluation process

The process considers the user's question, the system's response, and the context (selected knowledge nodes). It relies on the following questions to evaluate LLM application performance:

- Faithfulness evaluation: Is the generated response consistent with the context (knowledge nodes)?

- Adequacy evaluation: Do the response and context match the query?

- Context Appropriateness: Which context nodes are used to generate the response?

Other methods and approaches based on detailed guidelines will be analyzed in future tasks.

Why LlamaIndex?

LlamaIndex is an advanced Open Source tool designed for efficient knowledge processing and management in LLM applications. It stands out for its flexibility and versatility, offering built-in evaluation methods for automatic assessment of LLM-generated responses. This helps detect model hallucinations and assess response coherence with the context.

Assuming we have documents (a knowledge base) to use in an application, LlamaIndex allows easy processing of various document types to create knowledge nodes. Many basic usage examples of LlamaIndex are available on Internet, so we will not expand on this topic here. The basic usage is:

documents = SimpleDirectoryReader("data").load_data()

index = VectorStoreIndex.from_documents(documents)Based on indexed data, a search engine can be created:

query_engine = index.as_query_engine()

response = query_engine.query("What does co.brick do?")

print(response)Faithfulness evaluation

Using the FaithfulnessEvaluator from LlamaIndex, we can assess whether the query engine's response matches any source nodes. This is crucial for detecting model hallucinations.

llm = OpenAI(model="gpt-3.5-turbo", temperature=0)

evaluator = FaithfulnessEvaluator(llm=llm)

contexts = [node.node.text for node in response.source_nodes]

eval_result = evaluator.evaluate(query=query, response=str(response), contexts=contexts) print(eval_result.passing)The result is either True or False. This method can be adapted to work on a set of reference or automatically generated questions.

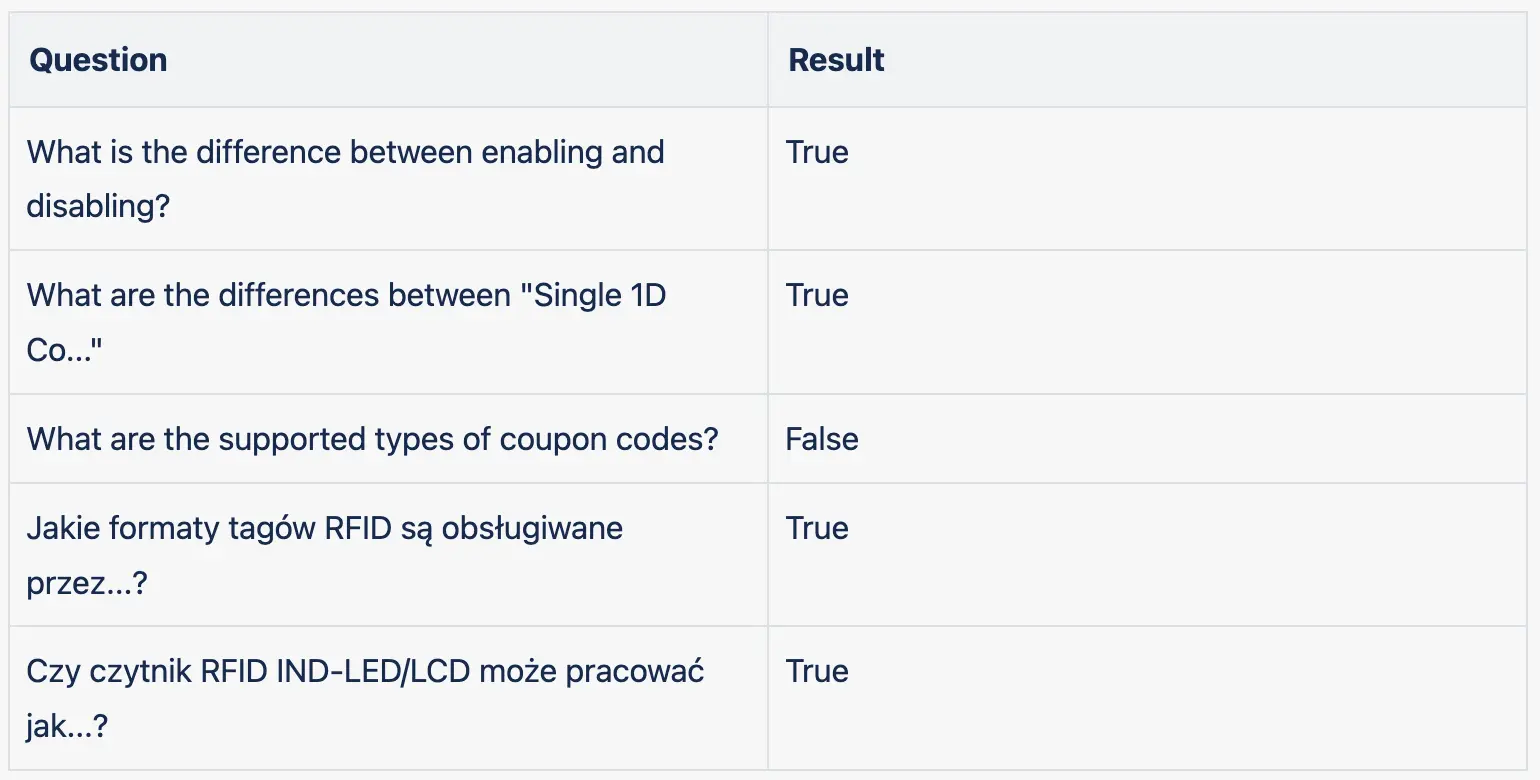

Example results for a series of technical questions (questions were generated in Polish and English):

Query response evaluator

The QueryResponseEvaluator assesses the coherence of the question and answer (excluding context). Usage is similar to FaithfulnessEvaluator:

evaluator = QueryResponseEvaluator(llm=llm) response = query_engine.query(example_query)

contexts = [node.node.text for node in response.source_nodes]

eval_result = evaluator.evaluate_response(query=query, response=str(response), contexts=contexts) print(eval_result.passing)Context relevancy evaluator

Using the RelevancyEvaluator, we can analyze each context node's relevance to the question and answer, essential for evaluating the effectiveness of the RAG process.

query_engine = index.as_query_engine(similarity_top_k=6)

response = query_engine.query(example_query)

contexts = [node.node.text for node in response.source_nodes]

llm = OpenAI(temperature=0, model="gpt-4")

evaluator = RelevancyEvaluator(llm=llm)

eval_source_result_full = [evaluator.evaluate(query=example_query, response=response_vector.response, contexts=[source_node.get_content()]) for source_node in response_vector.source_nodes]

#print(eval_source_result_full)Conclusions

In our analysis, we used LlamaIndex and its evaluators to assess the system's ability to answer user questions. Similar evaluators are likely available in other libraries. Automatic methods rely on LLM evaluators, and the quality of responses depends on the evaluator model. For instance, GPT-3 often produced poor results, whereas GPT-4's evaluations were reliable. Automatic evaluation methods can effectively check if the system is resistant to hallucinations and optimize knowledge base creation processes. However, using these methods involves costs related to model APIs. In context node evaluation, larger models are required. This text does not exhaust the topic of evaluation, and we will continue our work to develop satisfactory automatic evaluation methods for LLM applications. I invite you to implement these methods in your projects and experiment with different LLM models to achieve the best results.

Enjoyed this article? Don't miss out! Join our LinkedIn community and subscribe to our newsletter to stay updated on the latest business and innovation news. Be part of the conversation and stay ahead of the curve.

Transform your business with our expert